Introduction to Virtualization: A Comprehensive Guide for Beginners

This is a complete beginner's introduction to virtualization. Learn types of virtualization, its essential components and benefits.

Virtualization plays a critical role today. From consumer-level desktop usage to enterprise-level cloud services, it has a wide variety of applications.

This guide will help you get started with virtualization in a comprehensive manner. It gives you enough fundamental knowledge, whether you are a student, an engineer or even a CTO, to understand the different types of virtualization and how they are used in the industry today.

This is a huge article so let me summarize what I am going to talk about in it.

- The first part introduces the host OS, virtual machines (VMs) and hypervisors

- The second part describes the essential components of virtualization: CPU, RAM, network and storage

- The third part sheds light on the benefits of virtualization

Let's begin!

Virtual Machines, Hosts and Hypervisors

To have a better understanding of Virtual Machines, Hosts and Hypervisors, it is essential that you begin with the hardware fundamentals.

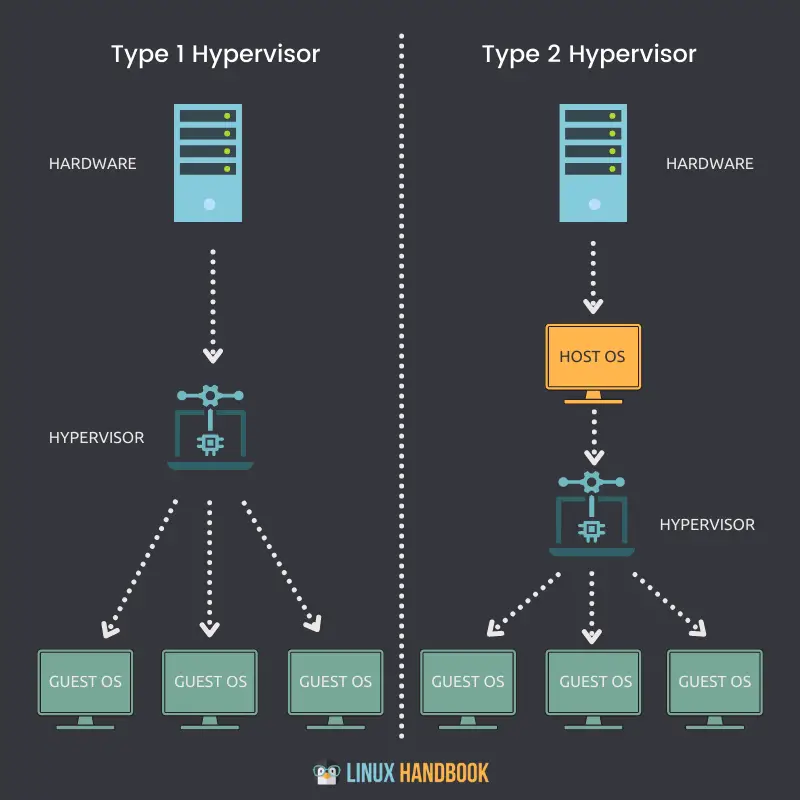

First, you require a physical machine/server that includes the following components that make up the entire system:

- Power Supply Unit (PSU)

- Motherboard

- Central Processing Unit (CPU)

- Random Access Memory (RAM)

- Network Interface Card (NIC)

- Storage - Hard Disk Drive (HDD) or Solid State Drive (SSD)

All these components are assembled together and work in sync as a single computing unit, becoming your very own personal computer (PC) or server. Wait, a personal server sounds interesting!

What is an Operating System(OS)?

An operating system is system software that acts as an interface between the user and the computer to run a variety of applications, with or without a graphical user interface. One of these applications can be a dedicated virtualization program such as VirtualBox.

What is a Hypervisor?

A hypervisor is system software that acts as an intermediary between computer hardware and virtual machines. It is responsible for allocating hardware resources to the virtual machines, each of which works independently on a physical host. For this reason, hypervisors are also called virtual machine managers.

This system software acts as an interface between the user and the computer with the sole purpose of virtualization, with or without a graphical user interface. One example of such a hypervisor is VMware ESXi.

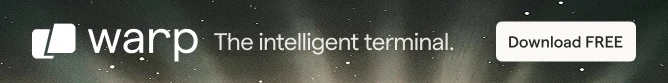

A hypervisor consists of three main modules:

Dispatcher — It constitutes the entry point of the monitor and reroutes the instructions issued by the virtual machine instance to either the allocator or interpreter modules described below.

Allocator — Whenever a virtual machine tries to execute an instruction that results in changing the associated machine resources, the allocator is invoked by the dispatcher, which then allocates the system resources to be provided to the virtual machine.

Interpreter — It consists of interpreter routines that are executed whenever a virtual machine executes a privileged instruction. This is also invoked by the dispatcher.
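To make the three modules a little more concrete, here is a minimal Python sketch of how a dispatcher might route work to an allocator or an interpreter. Everything in it is an assumption made for illustration: the class name `ToyHypervisor`, the instruction names and the memory bookkeeping are hypothetical and do not correspond to any real hypervisor's code.

```python
from dataclasses import dataclass, field

# Hypothetical instruction categories, chosen only for illustration.
RESOURCE_OPS = {"alloc_memory", "attach_disk"}
PRIVILEGED_OPS = {"halt", "write_control_register"}


@dataclass
class ToyHypervisor:
    free_memory_mb: int
    log: list = field(default_factory=list)

    def dispatch(self, vm: str, op: str, arg: int = 0) -> str:
        """Dispatcher: entry point that routes an instruction to a module."""
        if op in RESOURCE_OPS:
            return self.allocate(vm, op, arg)
        if op in PRIVILEGED_OPS:
            return self.interpret(vm, op)
        # In this toy model, everything else runs directly on the CPU.
        return "direct"

    def allocate(self, vm: str, op: str, amount: int) -> str:
        """Allocator: decide whether the VM gets the resource it asked for."""
        if op == "alloc_memory" and amount > self.free_memory_mb:
            self.log.append((vm, op, "denied"))
            return "denied"
        if op == "alloc_memory":
            self.free_memory_mb -= amount
        self.log.append((vm, op, "granted"))
        return "granted"

    def interpret(self, vm: str, op: str) -> str:
        """Interpreter: emulate a privileged instruction on the VM's behalf."""
        self.log.append((vm, op, "emulated"))
        return "emulated"


hv = ToyHypervisor(free_memory_mb=1024)
results = [
    hv.dispatch("vm1", "add"),
    hv.dispatch("vm1", "alloc_memory", 512),
    hv.dispatch("vm2", "alloc_memory", 2048),
    hv.dispatch("vm2", "halt"),
]
print(results)
```

The point of the sketch is only the routing: resource-changing requests go to the allocator, privileged instructions go to the interpreter, and both are reached through the dispatcher.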

You must install either an operating system or a hypervisor that acts as an interface for you to interact with a physical host server/computer.

Let's assume it's Ubuntu Server where you can host or run various applications. These applications on the server run inside the operating system.

Ubuntu controls these applications and the amount of hardware resources they have access to.

Therefore, an operating system or hypervisor becomes an intermediary between the applications and the hardware itself.

What is a Virtual Machine(VM)?

A virtual machine is software that emulates all the functionality of a physical server and runs on top of a host operating system or a hypervisor.

Therefore, you are not going to run applications on the physical system directly. They will be run on virtual machines, each with their own independent operating systems. In this manner, virtual machines can run different operating systems individually within the same physical system.

All such VMs can share a common set of physical hardware and even interact with each other over a virtual network, just like physical computers do.

You can have a bunch of virtual machines, each with their own independent operating system, sharing and utilizing the same physical components as mentioned earlier.

A hypervisor, or virtualization software running on an operating system, is what actually controls the physical resources.

A hypervisor has direct access to the physical hardware and it controls which resources virtual machines get access to.

That includes:

- How much memory (RAM) is allocated

- How much physical CPU access they get

- How they access their network

- How they access their storage

As more virtual machines are created on operating system virtualization software (let's shorten it to OSVS) or a hypervisor, they all share that exact same set of physical resources.

Therefore, the OSVS or Hypervisor controls:

- How resources are shared among the individual virtual machines

- How resources are presented to the individual virtual machines

Types of Hypervisors

Let’s now take a look at the types of hypervisors and how they differ from each other.

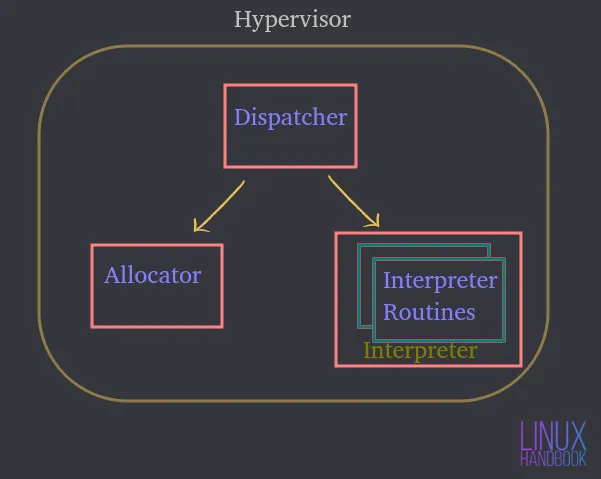

Type 1 Hypervisor

A hypervisor that can be natively installed and run directly on a physical host is called a Type 1 hypervisor.

Key pointers

- A Type 1 hypervisor can be directly installed on a bare-metal system or physical host.

- It does not require an operating system(OS) to be installed or available first, in order to deploy itself on a server.

- Direct access to CPU, Memory, Network, Physical storage.

- Hardware utilization is more efficient, delivering the best performance.

- Better security because of an absence of any extra layer for hardware access.

- Each type 1 hypervisor always requires a dedicated physical machine.

- It can cost more and is better suited to enterprise-grade solutions.

- VMware ESXi, Citrix Hypervisor and Microsoft Hyper-V are some examples of Type 1 Hypervisors.

Type 2 Hypervisor

A hypervisor that cannot be natively installed and requires an operating system to run on a physical host is called a Type 2 hypervisor.

Key pointers

- A Type 2 hypervisor cannot be directly installed on a bare-metal system or physical host.

- It requires an operating system to be installed or available first, in order to deploy itself.

- Indirect access to CPU, Memory, Network, Physical storage.

- Because of the extra layer (the OS) between the hypervisor and the hardware, hardware utilization can be less efficient and performance can lag.

- Potential security risks, because the host operating system is an additional layer that can be attacked.

- Each type 2 hypervisor does not require a dedicated physical machine. There can be many on a single host.

- It can cost less and is better suited to small business solutions.

- VMware Workstation Player, VMware Workstation Pro and VirtualBox are some examples of Type 2 Hypervisors.

Virtual Machine files and live state

Let us now understand the files that make up our virtual machines and how they leverage shared storage.

A virtual machine leverages the memory, CPU, network and storage hardware of our physical host. How is it being done?

Through the hypervisor.

When a virtual machine is running, it has certain information in memory. This information is part of the live state of the VM.

So the VM is actually operational on the host. Whenever you play a video or open a web browser on the VM, those runtime operations are occurring in memory on the VM. But these are actually all occurring on the physical host. This is the live state of any virtual machine.

All runtime execution in the live state of the virtual machine is processed by the physical CPU, which is why it happens on the host.

When you open a browser to load a website, the network traffic passes through the NIC, which is also part of the physical host. The live state of a VM also uses storage adapters to send data traffic towards a storage device. That, again, happens in real time on the host.

A virtual machine does not have any physical hard disks. If you're running Linux on a virtual machine, it needs the ability to read and write data to and from a physical drive.

This means that access to a physical disk is necessary. The VM reads from, writes to and runs on a virtually allocated drive. But the operations are actually redirected towards a physical storage device somewhere. It could be across a Fibre Channel network, an Ethernet network, or a local disk right inside the host that runs the hypervisor or operating system virtualization software.

Virtual machines have to use a set of files for virtualization management. One of these files represents a virtually allocated drive or virtual disk. A VM reads from or writes to the virtual disk through these files. The data traffic flows through a storage adapter to make that happen.

Naturally, there can be many such files, belonging to other virtual machines, stored in the same physical location on the hard disk.

Let's now talk about how virtual machines shut down and boot back up.

To be able to do that, the VM needs configuration information, which primarily describes the resources it should get:

- How much memory is the VM supposed to get?

- How much CPU is it supposed to get?

- What is the allocated disk size?

- How would the VM access the network?

Once again, all of this information is stored in a file.

Let me list all the types of information that virtual machine files store to ensure full functionality:

- Static or dynamic storage

- Configuration information

- BIOS information

- Snapshots taken

So a virtual machine stores two kinds of states:

Live State

The live state is what's happening in real time which, as I just discussed, could be:

- How much current memory is being used

- How much CPU is being used

- What current applications are being used

- How network bandwidth is being used or traversed

If the physical host or the virtual machine inside it fails, all of the above real-time information is going to be lost. It is similar to unplugging a computer.

Persistent State

The persistent state consists of the files of the virtual machine that are permanently saved. That is made possible through:

- Storage files

- Configuration files

- Snapshot files

The files related to the persistent state make up the VM.
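The difference between the two states can be sketched in a few lines of Python. This is a toy model under stated assumptions: the `ToyVM` class, the JSON configuration format and the field names are all hypothetical and are not the file format of any real hypervisor.

```python
import json
import tempfile
from pathlib import Path


class ToyVM:
    def __init__(self, config_path: Path):
        self.config_path = config_path
        # Persistent state: read from a file, survives power cycles.
        self.config = json.loads(config_path.read_text())
        # Live state: exists only while the VM is running.
        self.live = None

    def power_on(self) -> None:
        self.live = {"memory_used_mb": 0, "running_apps": []}

    def run_app(self, name: str, memory_mb: int) -> None:
        self.live["running_apps"].append(name)
        self.live["memory_used_mb"] += memory_mb

    def power_off(self) -> None:
        # Live state is discarded; the configuration file is untouched.
        self.live = None


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "ubuntu-vm.json"
    path.write_text(json.dumps({"memory_mb": 4096, "vcpus": 2, "disk_gb": 40}))

    vm = ToyVM(path)
    vm.power_on()
    vm.run_app("browser", 700)
    live_before = dict(vm.live)
    vm.power_off()

    # A fresh object built from the same file sees the same configuration.
    restarted = ToyVM(path)
    config_after = restarted.config
```

After `power_off()`, the record of the running browser is gone, but a VM rebuilt from the same file still knows how much memory, CPU and disk it is supposed to get.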

Just as we humans cannot survive without proper nutrition, virtual machines need a consistent and proper allocation of resources. Without these resources, virtual machines will not perform at their best.

The four essentials without which VMs cannot achieve their best performance are:

- CPU

- RAM

- Network

- Storage

CPU Virtualization

How does a virtual machine get access to the CPU resources of the physical host?

The physical host has a physical CPU. Say, for example, we have a CPU with 4 physical cores. If you want a virtual machine to use 2 of these cores, you allocate 2 virtual CPUs (vCPUs) when you create it. The virtual machine then has access to 2 physical processor cores of the main CPU.

But this allocation does not mean that other virtual machines cannot use those cores. You can still assign all 4 cores to another virtual machine. In short, these resources can be shared by all virtual machines on the hypervisor or operating system virtualization software.

Depending on the type of workload, you should assign CPU cores based on what each virtual machine needs. If a VM is fine with 25% CPU utilization, you can assign the remaining resources to other VMs.

Therefore, you must always strive to rightsize your virtual machines based on application requirements, especially when it comes to CPU resources.
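Because cores are shared, the total number of virtual CPUs you hand out can exceed the number of physical cores. A quick way to keep an eye on that is to compute the ratio between the two. The helper below is a minimal sketch; the function name is hypothetical, and what counts as an acceptable ratio depends on your workloads.

```python
def vcpu_overcommit_ratio(physical_cores: int, vcpus_per_vm: list[int]) -> float:
    """Return total allocated vCPUs divided by physical cores."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vcpus_per_vm) / physical_cores


# The 4-core host from the text: one VM with 2 vCPUs, another with 4.
ratio = vcpu_overcommit_ratio(4, [2, 4])
print(f"vCPU to physical core ratio = {ratio:.2f}")  # 6 vCPUs over 4 cores
```

A ratio above 1 means the VMs together have been promised more CPU than physically exists, which is fine as long as they do not all need it at the same time.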

Memory virtualization

Let us now understand how virtual machines are able to utilize the memory resources of the physical host.

How much memory you can allocate to a virtual machine is once again based on the physical memory (RAM) you have on your physical server.

For example, if your server has 8 GB of RAM, you can allocate 4 GB and run a full-fledged Ubuntu Desktop on your virtual machine. The Ubuntu Desktop "thinks" that it has 4 GB of physical memory. But what actually happens is that this allocated memory is mapped from the actual 8 GB of physical memory itself.

When you assign 4 GB to the VM, it cannot use any more memory than that allocated amount.

As with CPUs, this does not mean that the 4 GB is dedicated to the VM at all times. If the applications running on this VM do not currently need this much memory, another VM can make use of the same resource, as and when needed.

When an application runs on a VM, the operating system (on the VM) allocates memory based on its own memory table, and when the application is closed, the OS marks those memory pages as free. But since it is never "aware" of the presence of a hypervisor or virtualization software, it is never going to inform the physical server about this de-allocation.

Therefore, it is the job of the hypervisor or the virtualization software to keep looking inside the VM's operating system to monitor these allocations and assign unused portions of memory to other VMs from time to time.

In contrast to this shared aspect of memory allocation among virtual machines, you can also enforce memory reservation for specific VMs to use a specific portion of the physical memory. This means that other VMs cannot use the reserved memory even if the VM that reserved it is not using it. In general practice, this is best avoided, because sharing memory ensures effective utilization of resources at the end of the day.

You can relate this to the shared and dedicated servers that are provided via Linode. It is more economical to use shared servers in day-to-day sysadmin practice and production.
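The interplay between allocation, actual usage and reservation can be illustrated with a small calculation. This is only a sketch under simplifying assumptions: the function name and the dictionary keys are hypothetical, and real hypervisors use more involved reclamation techniques than this arithmetic.

```python
def reclaimable_memory_mb(vms: list[dict]) -> int:
    """Memory the host could lend to other VMs right now.

    Each VM dict has 'allocated', 'in_use' and 'reserved' (all in MB).
    Idle memory is reclaimable only above the VM's reservation.
    """
    total = 0
    for vm in vms:
        protected = max(vm["in_use"], vm["reserved"])
        total += max(vm["allocated"] - protected, 0)
    return total


vms = [
    {"name": "desktop", "allocated": 4096, "in_use": 1024, "reserved": 0},
    {"name": "database", "allocated": 4096, "in_use": 1024, "reserved": 4096},
]
idle = reclaimable_memory_mb(vms)
print(idle)
```

Both VMs use the same amount of memory, but only the unreserved one contributes idle memory to the pool, which is why blanket reservations reduce overall utilization.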

Network Virtualization

An Ubuntu VM runs on a host, on a hypervisor or virtualization software. This virtual machine has what we call a virtual NIC (vNIC), which stands for virtual network interface card.

The operating system (Ubuntu) doesn't know that it's running within a virtual machine. So it expects to see all of the same kind of hardware that it would see if it was running on a physical server.

When it comes to network virtualization, you need to provide your virtual machine with a virtual network interface card.

Ubuntu is going to use its own drivers for a network interface card. As the guest operating system, it has no idea that it's not really a physical NIC.

It's a virtual NIC which is fake hardware presented to our virtual machine as if it was real. So if you have a physical server with a physical NIC, you would connect your ethernet cable from the ethernet port towards a physical switch.

If you've got a virtual machine with a virtual NIC, you're going to connect it to a port on a virtual switch, much like you would do it with a physical machine.

Therefore, inside our hypervisor or virtualization software, we're going to have something called the virtual switch. You can connect multiple virtual machines to this virtual switch, and they can communicate with each other.

As long as you put them on the same VLAN (virtual local area network), they're going to be able to communicate through the virtual switch just like they would be able to communicate through any other type of physical switch.

So if you have a single VM connected to a virtual switch, you can connect a second VM. This enables sending traffic from one VM to another directly within that virtual switch. As long as those VMs are on the same VLAN, they can communicate and the traffic never has to leave the host or traverse the physical network.

But, here is an important point. Some network traffic would have to traverse the physical network, and so the virtual switch is going to need an uplink.

Just like a physical switch has uplinks to a higher-level physical switch or a physical router, our virtual switch also requires uplinks. These uplinks are the actual physical adapters on the host. These physical adapters are called physical NICs (pNICs), or vmnics in VMware terminology.

A physical network interface card or NIC is usually integrated into the motherboards of modern servers.

But if you want, you can also opt for a dedicated NIC, plugged into the motherboard for additional networking.

When you install a hypervisor on a host, that host has one or more network interface cards, just like any other server would.

Those physical network interface cards on a host connect to a physical switch, and that physical switch is going to have connections to routers. This is the way it gets traffic out to the Internet.

Through this mechanism, it can also connect to other physical servers that are inside the same data center.

So, virtual machines can communicate with all of those components, whether they are inside or outside the same physical server.

If the traffic needs to leave the host to hit a router and get to another VLAN or another network, it flows out of the VM, through the virtual switch, and out of one of the virtual switch's uplinks towards the physical network.

When that traffic arrives at the physical switch, the switch does a MAC table lookup (the MAC table is a record of unique physical addresses and the ports they are reachable on).

It then forwards the packet out of the appropriate port towards its destination. It could be headed towards the Internet or to a physical host on your own local network.

This mechanism ensures that all of your virtual machines can communicate with all of the devices on your physical network. This is what a virtual switch does.
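The forwarding decision described above can be sketched as a toy model in Python. The class `ToyVirtualSwitch`, the MAC addresses and the VLAN numbers are made up for illustration, and the model ignores broadcasts, MAC learning and everything else a real virtual switch does.

```python
class ToyVirtualSwitch:
    def __init__(self):
        self.ports = {}  # MAC address -> (VM name, VLAN id)

    def connect(self, vm: str, mac: str, vlan: int) -> None:
        self.ports[mac] = (vm, vlan)

    def forward(self, src_mac: str, dst_mac: str) -> str:
        """Return where a frame from src_mac to dst_mac is delivered."""
        _, src_vlan = self.ports[src_mac]
        target = self.ports.get(dst_mac)
        if target is not None and target[1] == src_vlan:
            # Same switch, same VLAN: the frame never leaves the host.
            return f"local:{target[0]}"
        # Unknown destination or different VLAN: hand off to the physical network.
        return "uplink"


switch = ToyVirtualSwitch()
switch.connect("vm1", "00:00:00:00:00:01", vlan=10)
switch.connect("vm2", "00:00:00:00:00:02", vlan=10)
switch.connect("vm3", "00:00:00:00:00:03", vlan=20)

same_vlan = switch.forward("00:00:00:00:00:01", "00:00:00:00:00:02")
other_vlan = switch.forward("00:00:00:00:00:01", "00:00:00:00:00:03")
outside = switch.forward("00:00:00:00:00:01", "00:00:00:00:00:ff")
```

Traffic between `vm1` and `vm2` stays inside the host, while traffic to another VLAN or to an unknown address is sent out through the uplink so that a physical switch or router can deal with it.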

The fundamental idea here is to trick the guest operating system on the VM into "thinking" that it's got a physical network interface card. So you supply the same drivers to Ubuntu and it'll think that it has an actual hardware device.

When the data packets are sent out, the traffic is actually intercepted by the hypervisor, which points it towards the appropriate virtual switch as instructed.

Storage Virtualization

Now that you have learned about how VMs leverage CPUs, memory and network, let us proceed with the final resource: storage.

Let me reiterate that the guest operating system has no idea that it's running within a virtual machine. A VM has no dedicated physical hardware, and that includes a storage disk.

With networking, we had a virtual NIC.

With storage virtualization, we're going to have a virtual SCSI controller. Think about the way a physical server works with internal hard drives: when an operating system needs to write some sort of data to disk, it generates a SCSI command.

If it needs to read data from disk, it also generates a SCSI command and sends that command to the SCSI controller, which interfaces with the physical disks. That's the way Ubuntu works with storage; it doesn't know that it's running within a virtual machine here.

So we need to maintain that illusion for the guest operating system for storage mechanisms as well.

So you provide Linux (running on the VM) with a virtual SCSI controller. It behaves as if actual physical disks were attached to it.

Therefore, when Ubuntu needs to carry out some sort of storage command, it's going to think it has an actual SCSI controller.

When storage commands are sent to the SCSI controller, what actually happens is that the virtual SCSI controller (a virtual device) redirects them to the hypervisor. The virtual machine sends those storage commands through its virtual SCSI controller and they arrive at the hypervisor.

The hypervisor determines what happens to those storage commands from here. If the virtual machine has its own VMDK or virtual disk file on a local disk, the hypervisor simply applies those storage commands to that file on your local hard drive.

Apart from this most basic setup, the virtual disk could also live on:

- a Fibre Channel storage array

- an Ethernet-based iSCSI storage array

- a Fibre Channel over Ethernet (FCoE) storage array

But they all work in similar fashion.

What would differ is actually the network.

If the hypervisor sends that storage command to a Fibre Channel storage array, the command traverses the Fibre Channel network until it eventually arrives at the virtual disk.

Regardless of the hypervisor, your virtual machine is going to have a virtual disk.

That is how the SCSI commands get from your virtual machine to your virtual disk, regardless of where that virtual disk is located.

As an Ubuntu based virtual machine generates a SCSI command, it sends it to the virtual SCSI controller.

The hypervisor determines the appropriate location for that virtual disk and sends the command out through the storage adapter, and the storage command arrives at the data store.

These changes are actually written to the file that contains the virtual machine's virtual disk. It could be a .VMDK file (VMware), a .VDI file (VirtualBox) or any other virtual disk file type.
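To see why a plain file is enough to stand in for a disk, consider this minimal Python sketch. It maps block reads and writes onto byte offsets in a flat file. The class name, the 512-byte block size and the `guest.img` file name are assumptions for illustration; real formats such as VMDK add headers, sparse allocation and snapshots on top of this idea.

```python
import os
import tempfile

BLOCK_SIZE = 512  # bytes per logical block, assumed here for simplicity


class ToyVirtualDisk:
    """A flat file standing in for a virtual disk."""

    def __init__(self, path: str, num_blocks: int):
        self.path = path
        self.num_blocks = num_blocks
        with open(path, "wb") as f:
            f.write(bytes(num_blocks * BLOCK_SIZE))  # zero-filled backing file

    def write_block(self, lba: int, data: bytes) -> None:
        if not 0 <= lba < self.num_blocks or len(data) != BLOCK_SIZE:
            raise ValueError("bad block address or size")
        with open(self.path, "r+b") as f:
            f.seek(lba * BLOCK_SIZE)  # block address -> byte offset in the file
            f.write(data)

    def read_block(self, lba: int) -> bytes:
        if not 0 <= lba < self.num_blocks:
            raise ValueError("bad block address")
        with open(self.path, "rb") as f:
            f.seek(lba * BLOCK_SIZE)
            return f.read(BLOCK_SIZE)


with tempfile.TemporaryDirectory() as tmp:
    disk = ToyVirtualDisk(os.path.join(tmp, "guest.img"), num_blocks=8)
    payload = b"A" * BLOCK_SIZE
    disk.write_block(3, payload)
    round_trip = disk.read_block(3)
    untouched = disk.read_block(0)
    file_size = os.path.getsize(disk.path)
```

The guest only ever sees numbered blocks. Where the backing file lives (local disk, iSCSI, Fibre Channel) is a detail the hypervisor handles behind this interface.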

I hope by now you have understood the basics of network and storage virtualization. Let me highlight the efficiency & mobility benefits of virtualization and also how you can convert physical hosts to virtual machines.

Benefits of Virtualization

So far we've talked about how virtualization basically works through the various modes of resource management. Let's now talk about the many key benefits.

Consolidation

First, let's talk about efficiency.

Compare your virtual infrastructure to a gymnasium. Some of you may have experienced this before.

If you're a gym goer around January 1st, the gym starts to get really busy.

During the normal part of the year, a gym with around 20 treadmills and 50 exercise machines would suffice.

But if you're a gym owner, you know that come January, there are going to be two to three times as many people, and it would be more productive to triple the size of your environment.

When all those people realize that, yeah, maybe they don't really like exercising so much, they stop using the gym.

After that, you can downsize the gym to match demand.

Now this would also be really great if you could do the same thing with the hardware of your virtual data center.

When you deal with virtualization, it brings you to the idea of cloud computing, where there is a vendor or service provider like Linode, for example. With it you can spin up a bunch of temporary virtual machines on somebody else's hardware and accommodate that busy time of the year for your customers.

But you can't really get to cloud computing without virtualizing first. This is the first step along that path to where we can get to what we call a state of elasticity, where your infrastructure can rapidly grow or shrink.

Virtualization is a building block towards that kind of flexibility. It provides us with the ability to consolidate workloads.

Below, we see a data center of physical servers.

As a best practice, each of these servers shouldn't be running just a single operating system.

Think about how many more workloads you could accomplish if you were running 20 to 30 Linux instances on every single one of these servers instead of just one per server!

That is the biggest benefit of virtualization, and the one that initially drove the move to virtualize: consolidation.

Efficiency

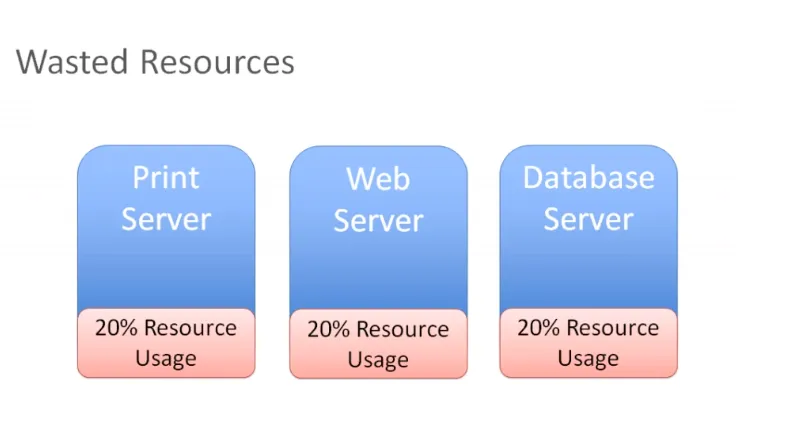

In the below diagram, you have three different servers with different roles that are available as individual physical systems in our environment:

You have a print server that's using 20 percent of its resources, and the same goes for the web server and the database server.

These are physical servers that aren't really using all of their resources. If you think about it, you paid for all that hardware, and if you're only using 20 percent, you're wasting 80 percent.

With resources stranded on physical hosts that can each run only one operating system, you now have two choices to take care of this issue:

You could start installing other applications on the server and run multiple applications on it. But if you need to reboot the print server, you will have to worry about what else is running on it.

What else could you impact by doing maintenance on your print server? There are going to be too many interdependent applications and use cases on the same hardware, and that brings efficiency down considerably.

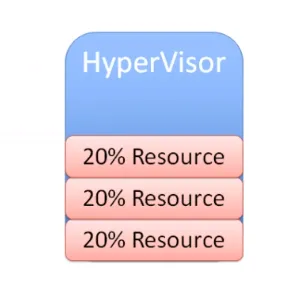

Therefore, most of the time, what we end up with is just resource waste. You can instead get rid of those physical servers and consolidate those workloads on a hypervisor:

Through this consolidation, you can now run multiple operating system instances on that hypervisor. Now you can very easily and efficiently maximize, resize and hit a sweet spot that could be 60 or 70 percent resource utilization.

So now you're efficiently using the resources that you've purchased, but you're not overburdening them.

That's our ultimate goal with virtualization: to make the most of the investment in hardware without wasting extra capital on resources that are never going to be utilized.
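The arithmetic behind the print, web and database server example is simple enough to write down. The function below is a back-of-the-envelope sketch: its name is hypothetical, it assumes utilization adds up linearly, and it ignores the overhead of the hypervisor itself.

```python
def consolidated_utilization(loads_percent: list[float], host_capacity: float = 1.0) -> float:
    """Utilization (%) of one host after moving all workloads onto it.

    loads_percent: utilization of each original server, in percent of one
    server's capacity. host_capacity: the new host's capacity relative to
    one original server (1.0 means identical hardware).
    """
    return sum(loads_percent) / host_capacity


# Print, web and database servers at 20% each, moved to one identical host.
after = consolidated_utilization([20, 20, 20])
print(f"{after:.0f}% utilization on the consolidated host")
```

Three servers idling at 20 percent become one host at roughly 60 percent, which lands in the sweet spot mentioned above while freeing up two physical machines.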

Mobility

Another big benefit of virtualization is mobility.

If you can recall how network and storage virtualization work, think about a scenario here where you have a Fibre Channel storage array. Let's also consider that you've just invested in a new iSCSI storage array.

Now let's say you've got your Fibre Channel storage array and want to relocate this virtual machine and all of its files to your new iSCSI storage device, which is on a different network.

Say it's on the Ethernet network. You can now relocate easily with your hypervisor or virtualization software. As the SCSI commands come out of your VM, they are received by the hypervisor. When you use another storage adapter and relocate this VM's files to the other storage array, the hypervisor is aware that you've made that change.

It would simply redirect those storage commands to the new location and the plus point is you can even do this without any downtime on your virtual machine.

Let's look at another scenario.

Say that you have multiple hosts and now choose to take a Linux VM and relocate the live state of the VM to another host. That is going to work as well, as long as the other host has the ability to connect to the shared storage. Your VM will still be able to access its virtual disk and all of the other critical VM files that it needs.

This is how storage virtualization and shared storage help achieve mobility. You can take virtual machines, move them to different hosts, and move their files from one storage system to another without any downtime.

It is all because of the hypervisor in the middle which interposes itself between the virtual machine and the hardware.

This is what is popularly known as decoupling. It means that the virtual machine doesn't have a direct relationship with any hardware. You can move it from one physical server to another. You can also move it from one storage system to another. There's no fixed relationship between virtual machine and specific hardware. They're decoupled in the true sense of the term.

Hence, as you can see, mobility is a major benefit of virtualization.

Another way to look at it:

Say you have multiple hosts with many virtual machines running on them, and the workload isn't as balanced as desired.

If host one has three virtual machines running on it, you may want to migrate some of those VMs to host two to equalize the workload on the two hosts.

You want to make sure that resource utilization is spread fairly evenly across your hosts at all times.

If host one is running really low on memory, you can move some VMs to some other host and ideally you'd want to do that without any downtime.

You can perform live migration and take a running VM and move it from one physical server to another with no downtime. That's one important reason you might want to migrate VMs.

Another reason is when you need to perform any kind of maintenance activity, such as installing additional physical memory in a host or any other form of physical maintenance.

This means that host is going to have downtime. To avoid disrupting the VMs, you can migrate all of them to another host, perform the maintenance (installing memory, applying patches or carrying out a necessary reboot) and then bring them back once the host is back up. All this can happen without any disruption for users.

How this is done:

Let's take a look at an example migration. Say we have a virtual machine running on host one, which you want to patch. You also need to reboot this host.

So you have to initiate a migration of this VM from host one to host two.

But there are some prerequisites:

- Shared storage

- Virtual disk files

- Configuration files

- Virtual NICs

- Snapshots

This is a VM that has network access. Its virtual NIC is connected to a virtual switch and it's available on a VLAN.

The virtual switch is connected to a physical switch and the virtual machine is addressed accordingly.

This VM exists on some VLAN that's got a port group on the virtual switch. If you move this VM down to another host and the virtual switch there does not have a matching configuration, that network would become unavailable.

You don't want that.

So you must ensure you have a compatible network on both of these hosts.

Simply put, you don't want the migrated VM to see anything different when it moves to host two, and you want the processors there to be compatible with the ones on host one.

If they're Intel on host one, you want them to be Intel on host two as well, ideally from a compatible processor generation. Live migration between Intel and AMD hosts is generally not supported.

You want these two hosts to be as nearly identical as you can possibly make them.

When it comes to the network and storage configuration, they obviously need to match up.
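A pre-flight check for these prerequisites could look roughly like the Python sketch below. Every name in it (`HostProfile`, `migration_blockers`, the datastore label) is hypothetical, and comparing CPU vendors is a deliberately crude stand-in for the detailed processor feature checks a real hypervisor performs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostProfile:
    """Hypothetical summary of what a host offers to VMs."""
    cpu_vendor: str
    vlans: frozenset
    datastores: frozenset


def migration_blockers(vm_vlan: int, vm_datastore: str,
                       src: HostProfile, dst: HostProfile) -> list[str]:
    """Return the reasons a live migration from src to dst would fail."""
    problems = []
    if vm_vlan not in dst.vlans:
        problems.append(f"VLAN {vm_vlan} is not configured on the destination")
    if vm_datastore not in dst.datastores:
        problems.append(f"datastore {vm_datastore} is not reachable from the destination")
    if src.cpu_vendor != dst.cpu_vendor:
        # Simplifying assumption: same vendor is treated as compatible.
        problems.append("CPU vendors differ")
    return problems


host1 = HostProfile("Intel", frozenset({10, 20}), frozenset({"shared-san"}))
host2 = HostProfile("Intel", frozenset({10, 20}), frozenset({"shared-san"}))
host3 = HostProfile("AMD", frozenset({20}), frozenset({"shared-san"}))

ok = migration_blockers(10, "shared-san", host1, host2)
blocked = migration_blockers(10, "shared-san", host1, host3)
```

An empty list means nothing in this simplified model stands in the way; otherwise each entry names a prerequisite that the destination host does not meet.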

Finally, you need a way to perform the transfer from host one to host two. So you're going to have a special network that takes all of the contents of memory, takes what's currently happening on the current VM and creates a copy of it on the other host.

Once you've covered these prerequisites, the rest of it is pretty straightforward.

A copy of the VM is then created on the destination host.

In VMware terminology, you're going to use something called a VMkernel port to create a network, and that network is going to be used to create a copy of the current VM on the destination host.

When that copy is complete, you capture everything that changed in the VM during the copy process. These changes are tracked in what is called a memory bitmap.

All of the contents of this memory bitmap are transferred over to the new copy of the VM and that becomes your live running virtual machine.

The downtime is so negligible that it's not even perceptible to the users of your applications. This is called live migration: a virtual machine is moved from one host to another while it's running.
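The copy-then-catch-up idea can be sketched as a small simulation. This is a simplified model of iterative pre-copy, not VMware's actual implementation: memory is a dictionary of pages, the guest's writes are supplied as a scripted list, and the function name `live_migrate` is made up for the example.

```python
def live_migrate(source: dict, writes_per_round: list[dict], max_rounds: int = 10) -> dict:
    """Copy memory pages to a destination while the source VM keeps running.

    source: page number -> contents on the source host (mutated by writes).
    writes_per_round: writes the guest makes during each copy round.
    """
    destination = {}
    dirty = set(source)  # first round: every page still has to be copied

    for round_no in range(max_rounds):
        # Copy the pages currently marked dirty.
        for page in dirty:
            destination[page] = source[page]
        # Meanwhile the guest keeps running and dirties some pages again.
        writes = writes_per_round[round_no] if round_no < len(writes_per_round) else {}
        source.update(writes)
        dirty = set(writes)  # the "memory bitmap" of changed pages
        if not dirty:
            break

    # Brief pause: copy the last dirty pages, then resume on the destination.
    for page in dirty:
        destination[page] = source[page]
    return destination


source = {0: "kernel", 1: "browser", 2: "cache"}
writes = [{1: "browser-v2", 2: "cache-v2"}, {2: "cache-v3"}]
dest = live_migrate(source, writes)
```

Each round only has to re-send the pages that changed during the previous round, so the final pause, when the last few dirty pages are copied, can be very short.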

This facilitates amazing flexibility, because you can move VMs wherever you need to.

Another notable advantage is automated load balancing.

You can group together hosts in what's called a cluster. Once you group those together, what you would now want is to allow your virtual machines to efficiently make use of the resources of the cluster as a whole.

You'll want to treat all of these hosts as interchangeable. It doesn't matter which host your VMs run on. If VMs need to move around to equalize workload, you can definitely make that happen automatically.

That's the purpose of automated load balancing.

In VMware terminology, this feature is called Distributed Resource Scheduler (DRS). It allows virtual machines to be live migrated automatically from host to host to balance the load across the cluster.
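As a rough illustration of what automated balancing does, here is a greedy sketch in Python. It is not how any real scheduler works internally: the function name, the single abstract "load" number per VM and the threshold are all assumptions chosen to keep the example short.

```python
def rebalance(hosts: dict[str, dict[str, int]], threshold: int = 10) -> list[tuple[str, str, str]]:
    """Greedy balancer: move VMs from the busiest to the idlest host.

    hosts maps host name -> {vm name: load}. Returns the list of
    (vm, from_host, to_host) migrations and mutates hosts in place.
    """
    moves = []
    while True:
        load = {h: sum(vms.values()) for h, vms in hosts.items()}
        busiest = max(load, key=load.get)
        idlest = min(load, key=load.get)
        gap = load[busiest] - load[idlest]
        if gap <= threshold:
            return moves
        # Only a VM smaller than the gap can narrow it; pick the largest such VM.
        candidates = {vm: l for vm, l in hosts[busiest].items() if 0 < l < gap}
        if not candidates:
            return moves
        vm = max(candidates, key=candidates.get)
        hosts[idlest][vm] = hosts[busiest].pop(vm)
        moves.append((vm, busiest, idlest))


hosts = {
    "host1": {"vm-a": 30, "vm-b": 25, "vm-c": 20},
    "host2": {"vm-d": 15},
}
moves = rebalance(hosts)
print(moves)
```

In this example a single migration is enough to even out the two hosts. A production scheduler would also weigh the cost of each migration, memory as well as CPU, and any placement rules you have defined.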

Converting physical servers to VMs

In this section, you'll learn about some concepts required to convert a physical machine to a virtual machine.

There are different software options out there to accomplish that.

Let's say you have a physical server with Ubuntu installed on it. It has all kinds of physical hardware like CPUs, RAM and network adapters and storage disks.

Our Ubuntu operating system has drivers for devices such as network adapters. It knows what kind of CPUs are being used: be it AMD or Intel CPUs. It has got a SCSI controller to connect to physical disks and the operating system is also aware of the actual physical hardware.

When we take this physical server and use one of our software tools to convert it to a virtual machine, the first step is that a virtual machine is created as a virtual replica of the physical machine.

You're going to have a virtual machine present on your hypervisor with the appropriate CPU, memory, network and storage specifications. It might not exactly match up with what we had in the physical server.

When you create a virtual machine, your goal should always be to rightsize that virtual machine. It's never to just take what the physical server had and duplicate it. The goal is always to rightsize it: give the virtual machine the resources it needs to do its job (efficiently run applications) and nothing extra.

You'd want your resource consumption to be about 60 to 70 percent utilization for CPU and the same for memory. You don't want four CPUs sitting underutilized at 10 percent. That's not efficient.
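One simple way to turn that target into a number is to work out how many vCPUs are actually busy and divide by the utilization you are aiming for. The helper below is a sketch with a hypothetical name and a 65 percent default target picked from the middle of the range above. Real sizing should also look at peaks, not just averages.

```python
import math


def rightsize_vcpus(current_vcpus: int, observed_util_percent: float,
                    target_util_percent: float = 65.0) -> int:
    """Suggest a vCPU count that would bring utilization near the target."""
    busy_vcpus = current_vcpus * observed_util_percent / 100
    return max(1, math.ceil(busy_vcpus / (target_util_percent / 100)))


# The example from the text: four vCPUs sitting at 10 percent.
suggested = rightsize_vcpus(4, 10)
# An undersized VM: two vCPUs running at 95 percent.
grown = rightsize_vcpus(2, 95)
print(suggested, grown)
```

The same function shrinks an oversized VM and grows an undersized one, which is the whole idea of rightsizing: match the allocation to what the application really uses.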

You're also going to need a virtual NIC for the virtual machine, and this is going to be a hardware change for the guest operating system.

So what would be most ideal to have is a network interface card that was specifically designed to run on a virtual machine. A good example of this is the VMware VMXNET adapter.

The beautiful thing about this is that the virtual machine now has no relationship with actual physical hardware, so we get mobility by breaking out of specific hardware dependencies every time.

Another benefit that is often overlooked is the fact that as you virtualize, all of your OS instances (Linux/Mac/Windows) are going to have a similar set of virtual hardware. It allows you to standardize your operating system configuration.

You replace your physical hardware with virtual hardware and your physical hard disk with a virtual disk.

This is a file that could be on the local storage of the host, or it could be on:

- a Fibre Channel storage array

- an iSCSI storage array

- an NFS server

What's most important is that you create this virtual disk and migrate all of the data from the source physical machine to the virtual disk. When the virtual machine is powered on, it's got its new hardware with its new disk. From this point on, it should just work.

That's how you convert a physical server to a virtual machine.

Depending on which hypervisor you're using, you can start using these solutions on your existing physical servers, convert them to virtual machines and run them on your hypervisor. Helder has shared some tips on building a homelab, which is a good way to experiment.

I hope you found this detailed introduction to virtualization helpful. If you have any feedback, please leave it in the comment section below. Thank you.

DevOps Geek at Linux Handbook | Doctoral Researcher on GPU-based Bioinformatics & author of 'Hands-On GPU Computing with Python' | Strong Believer in the role of Linux & Decentralization in Science