Chapter 4: Terraform State and Providers

Dive deep into state files, provider configuration, remote state, and the dangers of bad state handling.

How does Terraform remember what it created? How does it connect to AWS or Azure? Two concepts answer these questions: State (Terraform’s memory) and Providers (Terraform’s translators).

Without state and providers, Terraform would be useless. Let’s look at each in turn.

What is Terraform State?

State is Terraform’s memory. After terraform apply, Terraform records what it created; by default this goes into a local JSON file named terraform.tfstate.

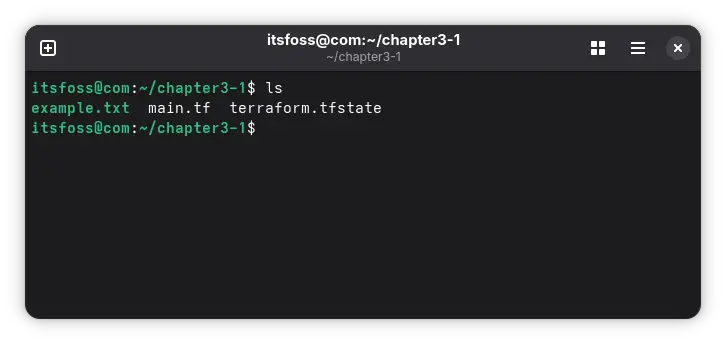

Run this example:

resource "local_file" "example" {
  content  = "Hello from Terraform!"
  filename = "example.txt"
}

Run terraform init first (it downloads the local provider), then terraform apply. Check your folder: you’ll see example.txt and terraform.tfstate.

State answers three questions:

- What exists? – Resources Terraform created

- What changed? – Differences from your current config

- What to do? – Create, update, or delete?

Change the content and run terraform plan. Terraform compares the state with your new config and shows exactly what will change. That’s the power of state.
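As an illustration, here is the earlier resource with only its content edited (the new string is arbitrary). Running terraform plan against it should report a difference for local_file.example; with the local provider, a content change is typically planned as a replacement of the file rather than an in-place update.

```hcl
resource "local_file" "example" {
  # Previously "Hello from Terraform!"; terraform plan compares this
  # value with the content recorded in terraform.tfstate.
  content  = "Hello again from Terraform!"
  filename = "example.txt"
}
```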

Local vs Remote State

Local state works for solo projects. But teams need remote state stored in shared locations (S3, Azure Storage, Terraform Cloud).

Remote state with S3:

terraform {
  backend "s3" {
    bucket         = "my-terraform-state"
    key            = "terraform.tfstate"
    region         = "us-west-2"
    dynamodb_table = "terraform-locks" # Enables locking
  }
}

State locking prevents disasters when multiple people run Terraform simultaneously. Person A acquires the lock; Person B’s run stops with a lock error (or waits, if started with -lock-timeout) until A finishes. Simple, but crucial for teams.
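Recent Terraform releases can also lock S3 state without a DynamoDB table. This is a sketch assuming Terraform 1.10 or later, where the S3 backend accepts use_lockfile and keeps a lock object in the same bucket as the state. Later releases mark dynamodb_table as deprecated, so check the S3 backend documentation for the version you actually run.

```hcl
terraform {
  backend "s3" {
    bucket       = "my-terraform-state"
    key          = "terraform.tfstate"
    region       = "us-west-2"
    use_lockfile = true # S3-native locking (assumes Terraform 1.10+); no DynamoDB table needed
  }
}
```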

Backend Configuration

Backends tell Terraform where to store state. The local backend uses a file on your computer; remote backends use shared storage such as an S3 bucket or an Azure Storage container.

Local backend (default):

# No configuration needed - stores terraform.tfstate locally

S3 backend (AWS):

terraform {
  backend "s3" {
    bucket         = "my-terraform-state"
    key            = "prod/terraform.tfstate"
    region         = "us-west-2"
    encrypt        = true
    dynamodb_table = "terraform-locks"
  }
}

Azure backend:

terraform {
  backend "azurerm" {
    resource_group_name  = "terraform-state"
    storage_account_name = "tfstatestore"
    container_name       = "tfstate"
    key                  = "prod.terraform.tfstate"
  }
}

GCS backend (Google Cloud):

terraform {
  backend "gcs" {
    bucket = "my-terraform-state"
    prefix = "prod"
  }
}

Terraform Cloud:

terraform {
  backend "remote" {
    organization = "my-org"
    workspaces {
      name = "production"
    }
  }
}
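On Terraform 1.1 and later, HashiCorp recommends a cloud block instead of backend "remote" when using Terraform Cloud (since renamed HCP Terraform). Here is a minimal equivalent of the configuration above, reusing its organization and workspace names. A configuration can have a cloud block or a backend block, not both.

```hcl
terraform {
  # Assumes Terraform 1.1+; replaces the backend "remote" block.
  cloud {
    organization = "my-org"

    workspaces {
      name = "production"
    }
  }
}
```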

Backend Initialization

After adding backend config, initialize:

terraform init

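Terraform initializes the backend (backends are built into Terraform, so there is nothing extra to download for them) and installs any required providers. If state already exists locally, Terraform asks whether to migrate it to the new backend.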

Migration example:

Initializing the backend...
Do you want to copy existing state to the new backend?
  Pre-existing state was found while migrating the previous "local" backend to the
  newly configured "s3" backend. No existing state was found in the newly
  configured "s3" backend. Do you want to copy this state to the new "s3"
  backend? Enter "yes" to copy and "no" to start with an empty state.

  Enter a value: yes

Type yes and Terraform migrates your state.

Partial Backend Configuration

Backend blocks cannot use variables, and you shouldn’t hardcode sensitive or environment-specific values in them anyway. Use partial configuration:

backend.tf:

terraform {
  backend "s3" {
    # Dynamic values provided at init time
  }
}

backend-config.hcl:

bucket         = "my-terraform-state"
key            = "prod/terraform.tfstate"
region         = "us-west-2"
dynamodb_table = "terraform-locks"

Initialize with config:

terraform init -backend-config=backend-config.hcl

Or via CLI:

terraform init \
  -backend-config="bucket=my-terraform-state" \
  -backend-config="key=prod/terraform.tfstate" \
  -backend-config="region=us-west-2"

Use case: Different backends per environment without changing code.
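For example, you might keep one small file per environment. Terraform’s backend documentation suggests the naming pattern *.<backend>.tfbackend; the dev.s3.tfbackend file below is a hypothetical sketch, and a matching prod.s3.tfbackend would differ only in its key.

```hcl
# dev.s3.tfbackend (hypothetical file; same format as backend-config.hcl)
bucket         = "my-terraform-state"
key            = "dev/terraform.tfstate"
region         = "us-west-2"
dynamodb_table = "terraform-locks"

# Usage:
#   terraform init -backend-config=dev.s3.tfbackend
# When pointing an already-initialized directory at another environment's
# file, add -reconfigure (or -migrate-state if you really mean to copy state).
```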

Changing Backends

Switching backends? Change config and re-run init:

terraform init -migrate-state

Terraform detects the backend change and offers to copy the existing state to the new backend.

Reconfigure without migration:

terraform init -reconfigure

Starts fresh, doesn’t migrate existing state.

Backend Best Practices

For S3:
- Enable bucket versioning (to roll back bad changes)
- Enable encryption at rest
- Use DynamoDB for state locking
- Restrict bucket access with IAM

For teams:
- Always use remote backends
- Never use local backends in production
- One state file per environment
- Use separate AWS accounts for different environments

Example S3 setup:

# Create S3 bucket (outside us-east-1, the region must also be given as a LocationConstraint)
aws s3api create-bucket \
  --bucket my-terraform-state \
  --region us-west-2 \
  --create-bucket-configuration LocationConstraint=us-west-2

# Enable versioning
aws s3api put-bucket-versioning \
  --bucket my-terraform-state \
  --versioning-configuration Status=Enabled

# Create DynamoDB table for locking (the S3 backend expects a string hash key named LockID)
aws dynamodb create-table \
  --table-name terraform-locks \
  --attribute-definitions AttributeName=LockID,AttributeType=S \
  --key-schema AttributeName=LockID,KeyType=HASH \
  --billing-mode PAY_PER_REQUEST \
  --region us-west-2
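The same setup can be written in Terraform. This sketch assumes AWS provider 4.x or later, where versioning, encryption, and public access settings are separate resources. It belongs in a small standalone configuration that keeps its own state locally, because the bucket must exist before any backend can use it.

```hcl
provider "aws" {
  region = "us-west-2"
}

resource "aws_s3_bucket" "state" {
  bucket = "my-terraform-state"

  lifecycle {
    prevent_destroy = true # guard against deleting the team's state bucket by accident
  }
}

resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id
  versioning_configuration {
    status = "Enabled" # keeps earlier state versions for rollback
  }
}

resource "aws_s3_bucket_server_side_encryption_configuration" "state" {
  bucket = aws_s3_bucket.state.id
  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256" # SSE-S3 encryption at rest
    }
  }
}

resource "aws_s3_bucket_public_access_block" "state" {
  bucket                  = aws_s3_bucket.state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

resource "aws_dynamodb_table" "locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID" # the S3 backend expects this exact key name

  attribute {
    name = "LockID"
    type = "S"
  }
}
```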

What Are Providers?

Providers are translators. They connect Terraform to services like AWS, Azure, Google Cloud, and 1,000+ others.

Basic AWS provider:

provider "aws" {
  region = "us-west-2"
}

resource "aws_s3_bucket" "my_bucket" {
  bucket = "my-unique-bucket-12345" # Must be globally unique
}

Authentication: Use the AWS CLI (aws configure) or environment variables such as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. Never hardcode credentials in your code.
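If you keep several named profiles from aws configure, the provider can pick one explicitly. A minimal sketch; my-profile is a placeholder. Leave profile out and the provider falls back to its default credential chain, which includes environment variables and the shared credentials file.

```hcl
provider "aws" {
  region  = "us-west-2"
  profile = "my-profile" # hypothetical profile created with: aws configure --profile my-profile
}
```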

Provider Requirements and Versions

Always specify provider versions to prevent surprises:

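terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # 5.x but not 6.0
    }
    # random_string below comes from this provider, so declare it too
    random = {
      source  = "hashicorp/random"
      version = "~> 3.0"
    }
  }
}

provider "aws" {
  region = "us-west-2"
}

# Random suffix keeps the bucket name globally unique
resource "random_string" "suffix" {
  length  = 6
  special = false
  upper   = false
}

resource "aws_s3_bucket" "example" {
  bucket = "my-bucket-${random_string.suffix.result}"
}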

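Version operators: = (exact), != (exclude a version), >, >=, <, <= (comparisons), and ~> (pessimistic constraint: only the rightmost version component you specify may increase).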
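How ~> behaves depends on how many version components you write. The sketch below shows three common styles; the version numbers are illustrative, not recommendations.

```hcl
terraform {
  required_version = ">= 1.5.0" # constraint on the Terraform CLI itself

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # >= 5.0.0 and < 6.0.0
    }
    random = {
      source  = "hashicorp/random"
      version = "~> 3.6.0" # >= 3.6.0 and < 3.7.0 (patch updates only)
    }
    local = {
      source  = "hashicorp/local"
      version = ">= 2.4, < 3.0" # explicit range; conditions are comma-separated
    }
  }
}
```

terraform init records the exact versions it selects in .terraform.lock.hcl. Committing that file keeps teammates on the same versions, and terraform init -upgrade moves to the newest versions the constraints allow.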

Provider Aliases: Multiple Regions

Need the same provider with different configurations? Use aliases:

provider "aws" {
  region = "us-west-2"
}

provider "aws" {
  alias  = "east"
  region = "us-east-1"
}

resource "aws_s3_bucket" "west" {
  bucket = "west-bucket-12345"
}

resource "aws_s3_bucket" "east" {
  provider = aws.east
  bucket   = "east-bucket-12345"
}

This creates buckets in two different regions. Perfect for multi-region deployments or backups.
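Aliases also matter for modules. A child module inherits only the default (unaliased) provider configurations, so an aliased one has to be passed explicitly with the providers argument. A sketch; ./modules/backup-bucket is a hypothetical module that uses the default aws provider internally.

```hcl
module "backup" {
  source = "./modules/backup-bucket" # hypothetical local module

  # Inside the module, the default "aws" provider now means the
  # us-east-1 configuration aliased as aws.east.
  providers = {
    aws = aws.east
  }
}
```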

State Best Practices

Must do:
- Add *.tfstate and *.tfstate.* to .gitignore (state files can contain secrets in plain text)
- Use remote state with encryption for teams
- Enable state locking to prevent conflicts
- Enable versioning on state storage (S3, etc.)

Never do:
- Manually edit state files
- Commit state to git
- Ignore state locking errors
- Delete state without backups

Essential State Commands

View state:

terraform state list                        # List all resources
terraform state show aws_s3_bucket.example  # Show resource details

Modify state:

terraform state mv <old> <new>    # Rename resource
terraform state rm <resource>     # Remove from state
terraform import <resource> <id>  # Import existing resource

Example - Renaming a resource:

# Change resource name in code, then:
terraform state mv aws_s3_bucket.old aws_s3_bucket.new
terraform plan  # Should show "No changes"
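Terraform 1.1 added moved blocks, which record a rename in code so it goes through the normal plan and apply review instead of a manual state command. A sketch of the same rename; the bucket name is a placeholder.

```hcl
# Declarative alternative to terraform state mv (Terraform 1.1+).
moved {
  from = aws_s3_bucket.old
  to   = aws_s3_bucket.new
}

resource "aws_s3_bucket" "new" {
  bucket = "my-bucket-12345" # placeholder; only the resource address changed
}
```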

Advanced State Management

Beyond basic commands, here’s what you need for real-world scenarios:

Pulling and Pushing State

Pull state to local file:

terraform state pull > backup.tfstate

Creates a backup. Useful before risky operations.

Push state from local file:

terraform state push backup.tfstate

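Restores state from a backup. Terraform runs safety checks first (it refuses to push state with a different lineage, or with an older serial than the destination, unless you add -force), but use this with extreme caution.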

Moving Resources Between Modules

Refactoring code? Move resources without recreating them:

# Moving to a module
terraform state mv aws_instance.web module.servers.aws_instance.web

# Moving from a module
terraform state mv module.servers.aws_instance.web aws_instance.web
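Moved blocks handle module refactors too. Assuming a hypothetical ./modules/servers module that now declares aws_instance.web, this is the declarative counterpart of the first command above:

```hcl
# Record the move into the module (Terraform 1.1+).
moved {
  from = aws_instance.web
  to   = module.servers.aws_instance.web
}

module "servers" {
  source = "./modules/servers" # hypothetical module that now contains aws_instance.web
}
```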

Removing Resources Without Destroying

Remove from state but keep the actual resource:

terraform state rm aws_s3_bucket.keep_this

Use case: You created a resource with Terraform but now want to manage it manually. Remove it from state, and Terraform forgets about it.
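Terraform 1.7 added removed blocks for the same job. A sketch assuming 1.7 or later: delete the resource "aws_s3_bucket" "keep_this" block from your code and add the following, where destroy = false tells Terraform to drop the object from state without destroying it.

```hcl
# Terraform 1.7+: forget the bucket, but leave it running in AWS.
removed {
  from = aws_s3_bucket.keep_this

  lifecycle {
    destroy = false
  }
}
```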

Importing Existing Resources

Someone created resources manually? Import them into Terraform:

# Import an existing S3 bucket
terraform import aws_s3_bucket.imported my-existing-bucket

# Import an EC2 instance
terraform import aws_instance.imported i-1234567890abcdef0

Steps:

- Write the resource block in your code (without attributes)

- Run import command with resource address and actual ID

- Run terraform plan to see what attributes are missing

- Update your code to match the actual resource

- Run terraform plan again until it shows no changes
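Terraform 1.5 added import blocks, which describe the same import in configuration so it runs through plan and apply. A sketch for the S3 bucket above. If you leave the resource block out, terraform plan -generate-config-out=generated.tf can draft one for you; the docs have described that feature as experimental, so review what it generates.

```hcl
# Terraform 1.5+: the import happens on the next terraform apply.
import {
  to = aws_s3_bucket.imported
  id = "my-existing-bucket"
}

# Resource block the import maps onto; adjust its arguments until
# terraform plan shows nothing but the pending import.
resource "aws_s3_bucket" "imported" {
  bucket = "my-existing-bucket"
}
```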

State Locking Details

When someone is running Terraform, the state is locked. If a lock gets stuck (for example, after a crashed run), the lock error Terraform prints includes the lock ID you need:

# Force unlock (dangerous!)
terraform force-unlock <lock-id>

Only use this if you’re absolutely sure no one else is running Terraform.

Replacing Providers

Migrating from one provider registry to another:

terraform state replace-provider registry.terraform.io/hashicorp/aws \
  registry.example.com/hashicorp/aws

Useful when moving to private registries.
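replace-provider only rewrites the provider addresses stored in state. The configuration has to name the new source as well, followed by terraform init. A sketch using the same example registry host as the command above:

```hcl
terraform {
  required_providers {
    aws = {
      # Must match the new address passed to replace-provider.
      source  = "registry.example.com/hashicorp/aws"
      version = "~> 5.0"
    }
  }
}
```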

State Inspection Tricks

Show specific resource:

terraform state show aws_instance.web

Shows all attributes of a single resource.

Filter state list:

terraform state list | grep "aws_instance"

Find all EC2 instances in your state.

Count resources:

terraform state list | wc -l

How many resources does Terraform manage?

When Things Go Wrong

State out of sync with reality?

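# Recommended: shows the detected drift and asks before updating state
terraform apply -refresh-only

# Older, deprecated command: updates state without letting you review the changes
terraform refresh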

Corrupted state?

- Check your state backups (S3 versioning saves you here)

- Restore from backup using terraform state push

- Always test in a non-prod environment first

Conflicting states in a team?
- Enable state locking (DynamoDB with S3)
- Use remote state, never local, for teams
- Implement CI/CD that runs Terraform centrally

Quick Reference

Backends:

# S3
terraform {
  backend "s3" {
    bucket         = "my-state-bucket"
    key            = "terraform.tfstate"
    region         = "us-west-2"
    dynamodb_table = "terraform-locks"
  }
}

# Azure
terraform {
  backend "azurerm" {
    resource_group_name  = "terraform-state"
    storage_account_name = "tfstatestore"
    container_name       = "tfstate"
    key                  = "terraform.tfstate"
  }
}

terraform init                            # Initialize backend
terraform init -backend-config=file.hcl   # Partial config
terraform init -migrate-state             # Migrate to new backend

Providers:

# Single provider
provider "aws" {
  region = "us-west-2"
}

# With version constraint
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

# Multiple regions with aliases
provider "aws" {
  alias  = "east"
  region = "us-east-1"
}

resource "aws_s3_bucket" "east_bucket" {
  provider = aws.east
  bucket   = "my-bucket"
}

Common Commands:

terraform state list              # List resources
terraform state mv <old> <new>    # Rename resource
terraform state rm <resource>     # Remove from state
terraform import <res> <id>       # Import existing resource

You now understand how Terraform remembers (state) and connects (providers). These two concepts are fundamental to everything else you’ll do with Terraform.

State and providers handle the “how” and “where” of Terraform. Now let’s explore the “what”: the actual infrastructure you create. In the next chapter, we’ll dive deep into resources, data sources, and the dependency system that makes Terraform intelligent about the order of operations.